Lighting in D3D (1)

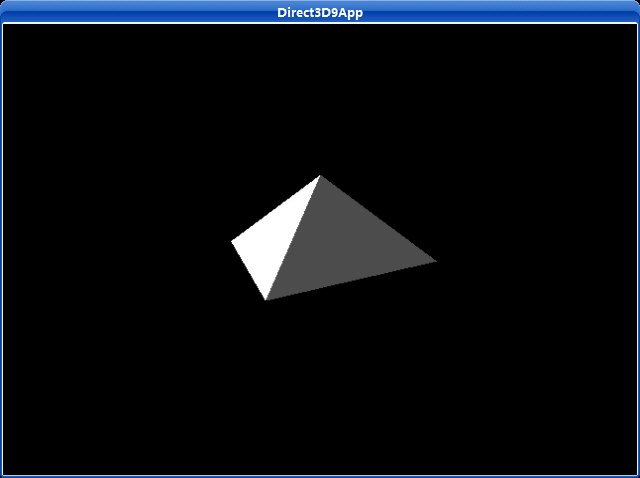

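To make a scene more realistic, we can add lighting. Lighting also helps convey an object's solidity and shape.

When lighting is used, we no longer specify vertex colors ourselves. Instead, Direct3D runs each vertex through its lighting engine and computes the vertex color from three things:

- the light sources we define,
- the materials,
- the orientation of the surface relative to the lights.

Computing vertex colors with a lighting model produces a more realistic scene.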

5.1 Light Components

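In the Direct3D lighting model, the light emitted by a light source is made up of three components. In other words, there are three kinds of light: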

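Ambient Light: this kind of light models light that has bounced off other surfaces, and it is used to brighten the entire scene. For example, parts of an object are usually lit to some degree even when they are not in the direct line of a light source. Ambient light accounts for this. It is a rough, cheap approximation of that reflected light.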

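Diffuse Reflection: this light travels in a particular direction. When it strikes a surface, it reflects equally in all directions. As a result, the reflected light reaches the eye no matter where the viewer is, so the viewer does not need to be taken into account. Diffuse lighting therefore depends only on the light direction and the surface orientation. Most of the light your sources emit will be of this kind.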

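Specular Reflection: this light also travels in a particular direction. When it strikes a surface, it reflects sharply in one direction, producing a bright shine that is visible only from certain angles. Because the light reflects in one direction, the specular lighting equation must account for the viewpoint as well as the light direction and the surface orientation. Specular light is used to model highlights on objects, such as the shine that appears when light strikes a polished surface.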
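To make the difference between the three kinds of light concrete, here is a simplified single-light, single-channel sketch. It ignores materials and attenuation.

- The diffuse term uses the Lambert factor max(N·L, 0).
- The specular term uses the half-vector form (N·H)^power. This is the form the Direct3D 9 fixed-function documentation describes, as we understand it.

`Vec3`, `shade`, and `ChannelTerms` are hypothetical names for this illustration, not Direct3D types.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Minimal stand-in vector type (assumption; the article itself uses D3DXVECTOR3).
struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// The three lighting terms for one color channel at one surface point.
struct ChannelTerms { float ambient, diffuse, specular; };

// n: unit surface normal; toLight: unit vector from the point toward the light;
// p: the surface point; eye: the viewer position; power: specular exponent.
ChannelTerms shade(float lightAmbient, float lightDiffuse, float lightSpecular,
                   Vec3 n, Vec3 toLight, Vec3 p, Vec3 eye, float power) {
    ChannelTerms t;
    // Ambient: a constant that does not depend on geometry at all.
    t.ambient = lightAmbient;
    // Diffuse (Lambert): depends only on the normal and the light direction.
    t.diffuse = lightDiffuse * std::max(dot(n, toLight), 0.0f);
    // Specular: uses the half vector between the light and eye directions,
    // so its value changes when the viewer moves.
    Vec3 toEye = normalize(sub(eye, p));
    Vec3 h = normalize(add(toLight, toEye));
    float nh = std::max(dot(n, h), 0.0f);
    t.specular = (dot(n, toLight) > 0.0f) ? lightSpecular * std::pow(nh, power) : 0.0f;
    return t;
}
```

Moving the eye changes only the specular term. The diffuse term stays the same, which is why the text says the viewer can be ignored for diffuse light.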

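Specular lighting requires more computation than the other light types, so Direct3D lets you turn it off. In fact, it is off by default. To use specular lighting, you must enable the D3DRS_SPECULARENABLE render state: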

Device->SetRenderState(D3DRS_SPECULARENABLE, true);

Each type of light is described by a D3DCOLORVALUE structure or a D3DXCOLOR, which gives the color of the light. Here are a few examples of light colors:

D3DXCOLOR redAmbient(1.0f, 0.0f, 0.0f, 1.0f);

D3DXCOLOR blueDiffuse(0.0f, 0.0f, 1.0f, 1.0f);

D3DXCOLOR whiteSpecular(1.0f, 1.0f, 1.0f, 1.0f);

Note: when a D3DXCOLOR describes a light color, its alpha value is ignored.

5.2 Materials

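In the real world, the color we see on an object is determined by the color of the light it reflects. For instance, a red ball is red because it absorbs every color of light except red. The red light is reflected off the ball into our eyes, so we see the ball as red.

Direct3D models this through a material that we define for each object. A material lets us specify the percentage of each color of light that a surface reflects. In code, a material is represented by the D3DMATERIAL9 structure: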

typedef struct _D3DMATERIAL9 {

D3DCOLORVALUE Diffuse, Ambient, Specular, Emissive;

float Power;

} D3DMATERIAL9;

Diffuse: specifies the amount of diffuse light this surface reflects.

Ambient: specifies the amount of ambient light this surface reflects.

Specular: specifies the amount of specular light this surface reflects.

Emissive: adds color to the surface, making the object look as if it gives off its own light.

Power: specifies the sharpness of specular highlights. The higher the value, the sharper the highlight.
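One way to see what Power does is to look at the specular factor (N·H)^Power, assuming the half-vector form of the specular term. The sketch asks: at what angle between N and H does this factor fall to half its peak? A smaller angle means a tighter, sharper highlight. `highlight_half_angle` is a hypothetical helper written for this illustration.

```cpp
#include <cassert>
#include <cmath>

// Angle (radians) between the normal N and the half vector H at which the
// specular factor cos(angle)^power drops to 0.5, i.e. half of its peak value.
// Solving cos(theta)^power = 0.5 gives theta = acos(0.5^(1/power)).
double highlight_half_angle(double power) {
    return std::acos(std::pow(0.5, 1.0 / power));
}
```

With Power = 2 the highlight falls to half strength about 45 degrees away from its center. With Power = 50 it does so within about 10 degrees. So the 2.0f used by the material constants below gives a broad sheen, while larger values give a small, glossy spot.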

For example, suppose we want a red ball. We define the ball's material so that it reflects only red light and absorbs all other colors:

D3DMATERIAL9 red;

::ZeroMemory(&red, sizeof(red));

red.Diffuse = D3DXCOLOR(1.0f, 0.0f, 0.0f, 1.0f); // red

red.Ambient = D3DXCOLOR(1.0f, 0.0f, 0.0f, 1.0f); // red

red.Specular = D3DXCOLOR(1.0f, 0.0f, 0.0f, 1.0f); // red

red.Emissive = D3DXCOLOR(0.0f, 0.0f, 0.0f, 1.0f); // no emission

red.Power = 5.0f;

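Here we set the green and blue components to 0, meaning the material reflects 0% of those colors. We set the red component to 1, meaning the material reflects 100% of red light. Notice that we can control the reflected color separately for each type of light (ambient, diffuse, and specular).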

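Likewise, if we define a light source that emits only blue light, it will fail to light the ball. The blue light is completely absorbed and no red light is reflected. An object that absorbs all light appears black. Similarly, an object that reflects 100% of red, green, and blue light appears white.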
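The percentage model above amounts to a component-wise product of the light color and the material's reflectance. Direct3D applies this separately to the ambient, diffuse, and specular terms. The sketch below models only that multiplication. `Color` is a hypothetical stand-in for D3DCOLORVALUE with alpha dropped, since alpha is ignored for lighting.

```cpp
#include <cassert>

// Stand-in for D3DCOLORVALUE (assumption: r, g, b in [0, 1]; alpha omitted).
struct Color { float r, g, b; };

// Each material channel is the fraction of that light channel the surface reflects,
// so the reflected color is the channel-by-channel product.
Color reflect(Color light, Color material) {
    return {light.r * material.r, light.g * material.g, light.b * material.b};
}

bool is_black(Color c) { return c.r == 0.0f && c.g == 0.0f && c.b == 0.0f; }
```

A red material under blue light gives (0, 0, 0), which is black. The same material under white light gives pure red, and a material that reflects 100% of every channel returns white light unchanged.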

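Because filling out these structures by hand is tedious, we add the following helper functions for lights and materials, along with some global color and material constants, to the d3dUtility.h/cpp files: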

// lights

D3DLIGHT9 init_directional_light(D3DXVECTOR3* direction, D3DXCOLOR* color);

D3DLIGHT9 init_point_light(D3DXVECTOR3* position, D3DXCOLOR* color);

D3DLIGHT9 init_spot_light(D3DXVECTOR3* position, D3DXVECTOR3* direction, D3DXCOLOR* color);

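// colors (used by the material constants below)

const D3DXCOLOR WHITE(D3DCOLOR_XRGB(255, 255, 255));

const D3DXCOLOR BLACK(D3DCOLOR_XRGB(0, 0, 0));

const D3DXCOLOR RED(D3DCOLOR_XRGB(255, 0, 0));

const D3DXCOLOR GREEN(D3DCOLOR_XRGB(0, 255, 0));

const D3DXCOLOR BLUE(D3DCOLOR_XRGB(0, 0, 255));

const D3DXCOLOR YELLOW(D3DCOLOR_XRGB(255, 255, 0));

// materials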

D3DMATERIAL9 init_material(D3DXCOLOR ambient, D3DXCOLOR diffuse, D3DXCOLOR specular,

D3DXCOLOR emissive, float power);

const D3DMATERIAL9 WHITE_MATERIAL = init_material(WHITE, WHITE, WHITE, BLACK, 2.0f);

const D3DMATERIAL9 RED_MATERIAL = init_material(RED, RED, RED, BLACK, 2.0f);

const D3DMATERIAL9 GREEN_MATERIAL = init_material(GREEN, GREEN, GREEN, BLACK, 2.0f);

const D3DMATERIAL9 BLUE_MATERIAL = init_material(BLUE, BLUE, BLUE, BLACK, 2.0f);

const D3DMATERIAL9 YELLOW_MATERIAL = init_material(YELLOW, YELLOW, YELLOW, BLACK, 2.0f);

D3DLIGHT9 init_directional_light(D3DXVECTOR3* direction, D3DXCOLOR* color)

{

D3DLIGHT9 light;

ZeroMemory(&light, sizeof(light));

light.Type = D3DLIGHT_DIRECTIONAL;

light.Ambient = *color * 0.6f;

light.Diffuse = *color;

light.Specular = *color * 0.6f;

light.Direction = *direction;

return light;

}

D3DLIGHT9 init_point_light(D3DXVECTOR3* position, D3DXCOLOR* color)

{

D3DLIGHT9 light;

ZeroMemory(&light, sizeof(light));

light.Type = D3DLIGHT_POINT;

light.Ambient = *color * 0.6f;

light.Diffuse = *color;

light.Specular = *color * 0.6f;

light.Position = *position;

light.Range = 1000.0f;

light.Falloff = 1.0f;

light.Attenuation0 = 1.0f;

light.Attenuation1 = 0.0f;

light.Attenuation2 = 0.0f;

return light;

}

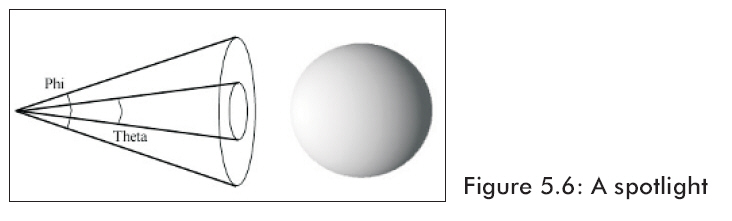

D3DLIGHT9 init_spot_light(D3DXVECTOR3* position, D3DXVECTOR3* direction, D3DXCOLOR* color)

{

D3DLIGHT9 light;

ZeroMemory(&light, sizeof(light));

light.Type = D3DLIGHT_SPOT;

light.Ambient = *color * 0.6f;

light.Diffuse = *color;

light.Specular = *color * 0.6f;

light.Position = *position;

light.Direction = *direction;

light.Range = 1000.0f;

light.Falloff = 1.0f;

light.Attenuation0 = 1.0f;

light.Attenuation1 = 0.0f;

light.Attenuation2 = 0.0f;

light.Theta = 0.4f;

light.Phi = 0.9f;

return light;

}

D3DMATERIAL9 init_material(D3DXCOLOR ambient, D3DXCOLOR diffuse, D3DXCOLOR specular,

D3DXCOLOR emissive, float power)

{

D3DMATERIAL9 material;

material.Ambient = ambient;

material.Diffuse = diffuse;

material.Specular = specular;

material.Emissive = emissive;

material.Power = power;

return material;

}
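The point and spot helpers above fill in Range, Attenuation0/1/2, Falloff, Theta, and Phi. The sketch below shows how those fields scale a light's contribution. It follows the attenuation and spotlight-factor formulas given in the Direct3D 9 documentation, as we understand them. `attenuation` and `spot_factor` are hypothetical helper names, not D3D functions.

```cpp
#include <cassert>
#include <cmath>

// Distance attenuation for point lights and spotlights:
//   atten = 1 / (a0 + a1*d + a2*d*d), and the light contributes nothing beyond Range.
float attenuation(float d, float range, float a0, float a1, float a2) {
    if (d > range) return 0.0f;
    return 1.0f / (a0 + a1 * d + a2 * d * d);
}

// Spotlight cone factor. rho is the cosine of the angle between the spotlight's
// direction and the direction from the light to the vertex. theta and phi are the
// full inner and outer cone angles in radians, as stored in D3DLIGHT9.
float spot_factor(float rho, float theta, float phi, float falloff) {
    float cosInner = std::cos(theta * 0.5f);
    float cosOuter = std::cos(phi * 0.5f);
    if (rho > cosInner) return 1.0f;   // inside the inner cone: full intensity
    if (rho <= cosOuter) return 0.0f;  // outside the outer cone: no light
    // Between the two cones the light fades out, shaped by Falloff.
    return std::pow((rho - cosOuter) / (cosInner - cosOuter), falloff);
}
```

The helpers' settings (Attenuation0 = 1, the other two 0, Range = 1000) mean the light does not dim with distance up to 1000 units. With Theta = 0.4 and Phi = 0.9, a spotlight is at full strength within 0.2 radians of its axis and contributes nothing beyond 0.45 radians.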

Vertex structures have no material property; instead, a current material must be set on the device. We set it with the IDirect3DDevice9::SetMaterial(CONST D3DMATERIAL9* pMaterial) method.

Suppose we want to render several objects with different materials. We would write the following:

D3DMATERIAL9 blueMaterial, redMaterial;

// set up material structures

Device->SetMaterial(&blueMaterial);

drawSphere(); // blue sphere

Device->SetMaterial(&redMaterial);

drawSphere(); // red sphere
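The key point of the pattern above is that the material is device state: it stays in effect for every draw call until SetMaterial is called again. The toy model below captures only that behavior. `MockDevice` and `Material` are hypothetical stand-ins, not the Direct3D interface, and the placeholder "(unset)" says nothing about Direct3D's actual initial material.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for D3DMATERIAL9; only a name is tracked.
struct Material { std::string name; };

// Models one property of IDirect3DDevice9: the current material persists
// across draw calls until it is replaced.
class MockDevice {
public:
    void SetMaterial(const Material* m) { current_ = *m; }
    // Records which material this draw call would be lit with.
    void Draw() { drawn_.push_back(current_.name); }
    const std::vector<std::string>& drawn() const { return drawn_; }
private:
    Material current_{"(unset)"};
    std::vector<std::string> drawn_;
};
```

If a third sphere is drawn without another SetMaterial call, it is lit with the red material from the previous call.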

5.3 Vertex Normals

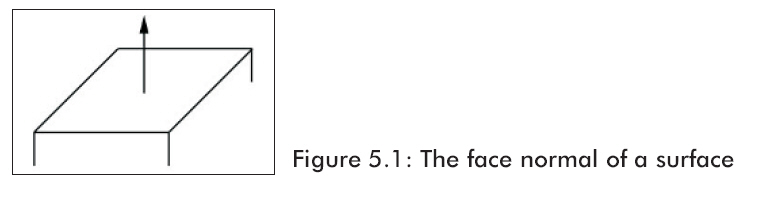

A face normal is a vector that describes the direction a polygon is facing (Figure 5.1).

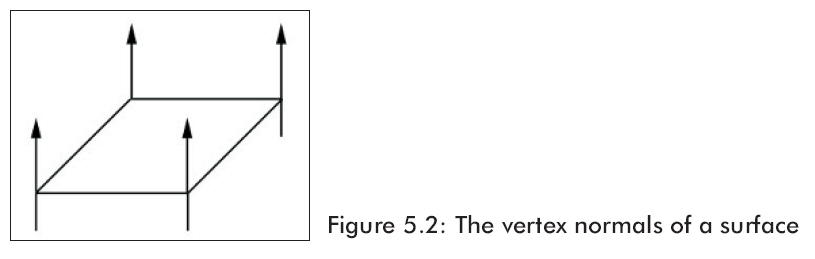

Vertex normals are based on the same idea, but instead of specifying one normal per polygon, we specify one for each vertex (Figure 5.2).

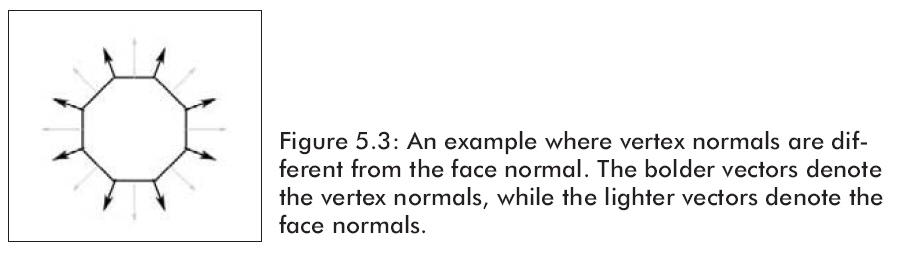

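Direct3D needs vertex normals so that it can determine the angle at which light strikes a surface. Since lighting is computed per vertex, Direct3D needs to know the surface orientation at each vertex. Note that a vertex normal is not necessarily the same as the face normal. Spheres and rings (tori) are good examples of objects whose vertex normals differ from their triangle normals (Figure 5.3).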
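To show the difference numerically, the sketch below compares two normals for one triangle whose corners lie on a unit sphere:

- its face normal, computed as the normalized cross product of two edge vectors;
- the natural vertex normal at one corner, which for a sphere is the normalized vector from the center to that vertex.

`Vec3`, `face_normal`, and `sphere_vertex_normal` are hypothetical helpers for this illustration.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// Face normal of triangle (p0, p1, p2) from two edge vectors lying on the triangle.
Vec3 face_normal(Vec3 p0, Vec3 p1, Vec3 p2) {
    return normalize(cross(sub(p1, p0), sub(p2, p0)));
}

// For a sphere, the surface at a vertex faces straight away from the center.
Vec3 sphere_vertex_normal(Vec3 p, Vec3 center) {
    return normalize(sub(p, center));
}
```

For the triangle (1,0,0), (0,1,0), (0,0,1), the face normal is (1,1,1)/√3, while the sphere's normal at (1,0,0) is (1,0,0) itself. The two differ by about 55 degrees, which is why a sphere lit with face normals looks faceted.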

To store a normal with each vertex, we must update our earlier vertex structure:

class cLightVertex

{

public:

float m_x, m_y, m_z;

float m_nx, m_ny, m_nz;

cLightVertex() {}

cLightVertex(float x, float y, float z, float nx, float ny, float nz)

{

m_x = x; m_y = y; m_z = z;

m_nx = nx; m_ny = ny; m_nz = nz;

}

};

const DWORD LIGHT_VERTEX_FVF = D3DFVF_XYZ | D3DFVF_NORMAL;
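With D3DFVF_XYZ | D3DFVF_NORMAL, each vertex is three position floats followed by three normal floats, 24 bytes per vertex. The class must match that layout exactly. The fixed-function lighting equations also assume unit-length normals. This standalone sketch repeats the article's class so it compiles without the D3D headers. It adds compile-time layout checks and a hypothetical `make_vertex` helper that normalizes the normal before storing it.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Same members, in the same order, as the article's cLightVertex.
class cLightVertex {
public:
    float m_x, m_y, m_z;     // position -> D3DFVF_XYZ    (3 floats)
    float m_nx, m_ny, m_nz;  // normal   -> D3DFVF_NORMAL (3 floats)
    cLightVertex() {}
    cLightVertex(float x, float y, float z, float nx, float ny, float nz)
        : m_x(x), m_y(y), m_z(z), m_nx(nx), m_ny(ny), m_nz(nz) {}
};

// The FVF implies position first, then normal, with no padding: 6 floats per vertex.
static_assert(sizeof(cLightVertex) == 6 * sizeof(float), "vertex stride must be 6 floats");
static_assert(offsetof(cLightVertex, m_nx) == 3 * sizeof(float), "normal must follow position");

// Hypothetical helper: scale the normal to unit length before storing it,
// since the lighting math assumes unit normals.
cLightVertex make_vertex(float x, float y, float z, float nx, float ny, float nz) {
    float len = std::sqrt(nx * nx + ny * ny + nz * nz);
    return cLightVertex(x, y, z, nx / len, ny / len, nz / len);
}
```

Normalizing up front is one option. Direct3D 9 also has a D3DRS_NORMALIZENORMALS render state that renormalizes normals after transformation, at some extra cost per vertex.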

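For simple objects such as cubes and spheres, we can work out the vertex normals by inspection. For more complex meshes, we need a more mechanical method. Suppose a triangle is formed by the vertices p0, p1, p2, and we need to compute a normal n0, n1, n2 for each vertex.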

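The simplest approach, described here, is to find the face normal of the triangle formed by the three points and use it as the normal of all three vertices. First, compute two vectors that lie on the triangle: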

p1 - p0 = u